Move Large Files Fast: Overcoming the challenge of transferring huge unstructured data sets

From the first data to the data explosion

Humans have been generating data since we first began writing thousands of years ago. Independently developed in at least two places (Ancient Sumer around 3200 BCE and Mesoamerica around 600 BCE), the first kinds of writing mostly recorded trade transactions, only later becoming a medium for poetry and lore.

The pace of information and data generation began to pick up with the invention of the printing press around 1450. But, according to Wesleyan University Librarian and scholar Fremont Rider, it wasn’t until the 1940s that the growth of information warranted the new phrase “information explosion” (Oxford English Dictionary).

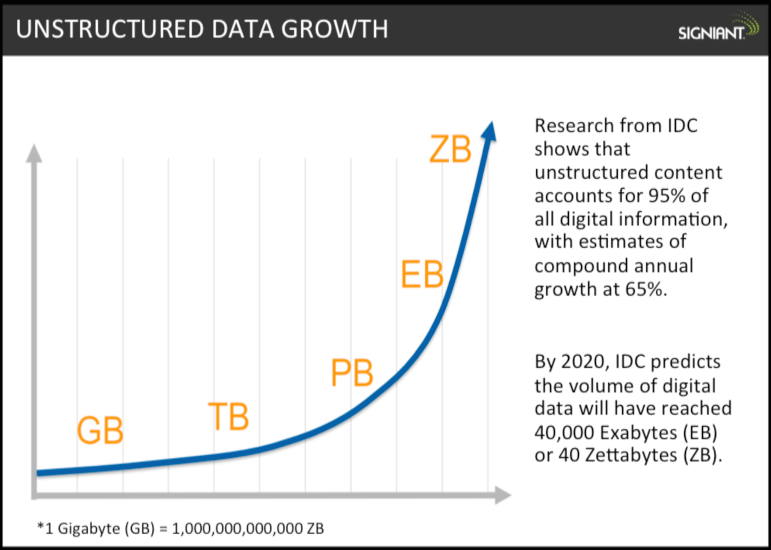

However, the information explosion of those days was a mere spark compared to now. The International Data Corporation (IDC) estimated that by 2006 we’d already created 161 exabytes of data, “3 million times the amount of information contained in all the books ever written.” And, by 2020, IDC predicts that the volume of the world’s digital data will have reached 44 zettabytes, with the largest subset (up to 95%) being unstructured data such as videos, photos, scientific research and medical records.

At the same time that unstructured data volumes are truly exploding, there’s an increasing need to store, send, and share those volumes with collaborators around the world. But traditional methods for transferring large files, such as FTP, are increasingly problematic as files get bigger and distances greater.

Bandwidth vs. Latency

Many people, even very technically savvy people, assume that the main problem with sending large files and unstructured data sets over IP networks is bandwidth capacity. It’s no wonder: Internet providers are notorious for claiming that purchasing more bandwidth will speed up the transmission of large files over long distances. But with protocols like FTP and HTTP, which are built on top of TCP, the major inhibitor is latency. TCP sends only a limited amount of data before it must wait for the receiver to acknowledge it, so the longer each round trip takes, the less of the available bandwidth a transfer can actually use. On a long-distance link, these protocols end up using only a relatively small share of the bandwidth, and purchasing more does not speed up transmissions.
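To put rough numbers on the latency limit, here is a minimal sketch based on the standard rule of thumb that a single TCP stream can deliver at most one window of data per round trip. The 64 KiB window (the classic TCP maximum without window scaling), the link speeds, and the round-trip times are illustrative assumptions rather than measurements, and the function names are hypothetical.

```python
def tcp_ceiling_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP stream: one window of data per round trip."""
    rtt_seconds = rtt_ms / 1000.0
    bits_per_second = window_bytes * 8 / rtt_seconds
    return bits_per_second / 1_000_000


def effective_mbps(link_mbps: float, window_bytes: int, rtt_ms: float) -> float:
    """A transfer cannot exceed the link speed or the latency ceiling."""
    return min(link_mbps, tcp_ceiling_mbps(window_bytes, rtt_ms))


# Assumption: a 64 KiB window, i.e. TCP without window scaling.
WINDOW_BYTES = 64 * 1024

if __name__ == "__main__":
    # Assumed scenarios: same city, cross-country, intercontinental.
    for rtt_ms in (10, 50, 150):
        for link_mbps in (100, 1000):
            rate = effective_mbps(link_mbps, WINDOW_BYTES, rtt_ms)
            print(f"RTT {rtt_ms:>3} ms, link {link_mbps:>4} Mbps "
                  f"-> at most {rate:.1f} Mbps")
```

Under these assumptions, a stream with a 100 ms round trip tops out at a little over 5 Mbps whether the link is 100 Mbps or 1 Gbps, which is why a bandwidth upgrade alone does not help. Modern TCP stacks can negotiate larger windows, which raises the ceiling, but the dependence on round-trip time remains.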

Signiant is a pioneer in the fast transfer of large files over public and private IP networks and has created Emmy award-winning technologies recognized as critical for the business of media production. While other data-intensive industries are now adopting large file transfer software, Media & Entertainment was the first industry that needed to move large files over the Internet, back in the early 2000s, as it transitioned from tape to file-based workflows. Signiant grew up handling the industry’s global, large video file transfers and its high-security, tight-deadline needs.

More industries than ever before are now dealing with growing file sizes and unstructured data. Indeed, “information explosions” are happening everywhere. If you’re working in one of these industries, learn more about Signiant’s high-speed file transfer solutions.