Beyond Entertainment: Enterprise Big Data Modeling with Virtual Reality

Virtual Reality is completely changing our experience of entertainment and educational media. No longer passive observers, we can quite literally step into a scene, move around, and interact with whatever is happening there. That’s not only fun; it could also greatly improve both the quality of our entertainment and the way we learn.

However, VR’s business applications get far less attention than they deserve, especially from large corporations with Big Data initiatives. Virtual Reality data modeling can cut through the complexity of interpreting Big Data, leading to faster and more useful insights. But before we get into how, let’s consider the current state of Big Data in the enterprise.

The promise and challenge of Big Data analytics

The 2017 NewVantage Partners (NVP) Big Data Executive Survey is revealing. It’s the firm’s fifth annual survey targeting senior Fortune 1000 business and technology decision-makers. “A strong plurality of executives, 48.4%, report that their firms have realized measurable benefits as a result of Big Data initiatives,” says the survey. “A remarkable 80.7% of executives characterize their Big Data investments as successful, with 21% of executives declaring Big Data to have been disruptive or transformational for their firm.”

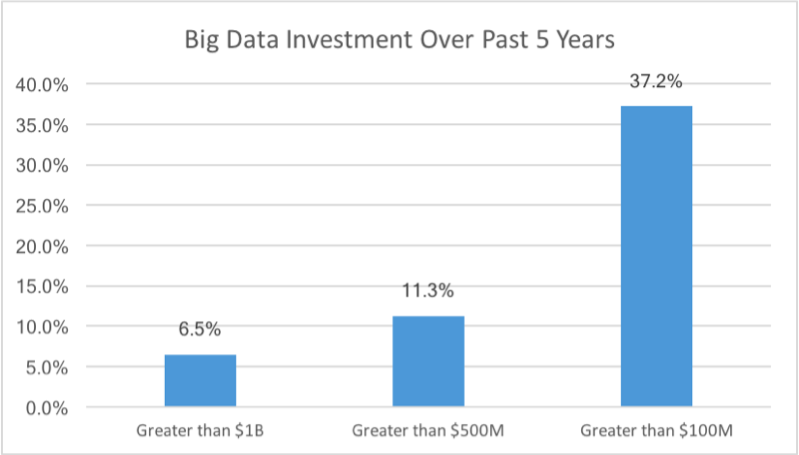

The results show Big Data firmly in the mainstream, and executives are backing that belief with large investments: “37.2% of executives report their organizations have invested more than $100MM on Big Data initiative within the past 5 years, with 6.5% investing over $1B.”

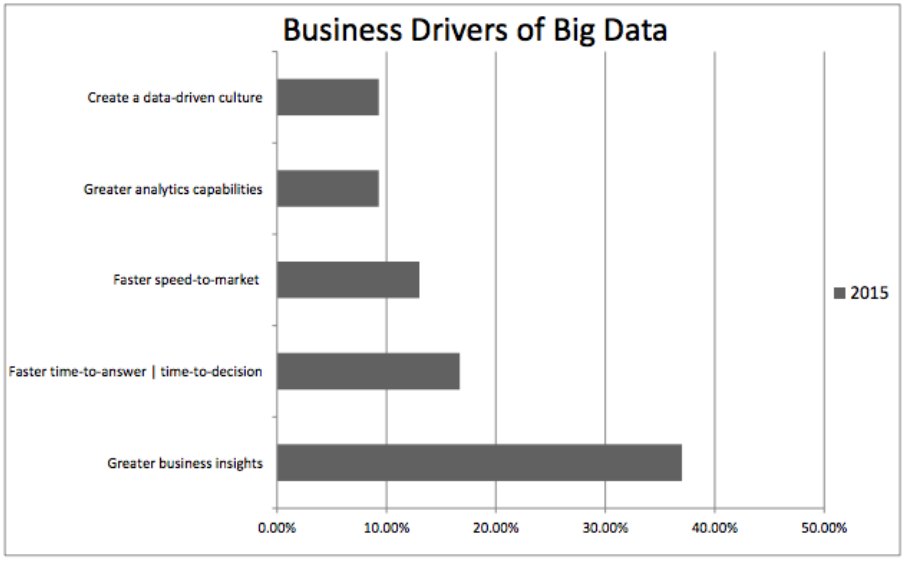

There are numerous reasons large enterprises are investing so heavily. Fundamentally, shifting to a more “data-driven” culture is paramount for long-term viability and success: it brings greater analytics capabilities, faster speed-to-market, faster decision-making and greater business insight. However, transforming legacy processes within such large companies in favor of Big Data intelligence can be challenging.

Transforming legacy infrastructures and processes to support Big Data

In response to a prior year’s NVP survey, MIT Sloan published a case study on American Express and the results of their new technology center dedicated to Big Data, cloud computing and mobile infrastructure.

“Mainstream companies will drive the future of Big Data investment. But they must demonstrate a willingness and flexibility to tackle their complex legacy environments and data infrastructure,” said MIT Sloan’s Randy Bean.

“For those firms that make the commitment, as evidenced by the example of American Express, the payback is likely to be high. Their ability to compete for customer success in the coming years may depend on it.”

Large companies realize the critical value of Big Data. Transforming their infrastructure and processes will be key to how successfully they’ll be able to use it.

Gaining actionable insight through the noise of Big Data

Developing effective Big Data applications in large companies is a complicated process, but two overarching challenges continue to stall progress: moving the data around for access to storage and analytic tools (either on-premises or in the cloud), and interpreting the analytic results. The latter is where Virtual Reality could have a huge impact.

“While bar graphs and pie charts do their job of providing headline figures, today’s Big Data projects require a far more granular method of presentation if they are going to tell the full story,” said Bernard Marr in a recent Forbes article.

“There are inherent limitations in the amount of data that can be absorbed through the human eye from a flat computer screen.

“By immersing the user in a digitally created space with a 360-degree field of vision and simulated movement in three dimensions, it should be possible to greatly increase the bandwidth of data available to our brains.”

Nate Silver, statistician and founder of the data-driven journalism site FiveThirtyEight (now owned by ESPN), agreed that “extracting the signal from the noise” is still a big problem. At last year’s HP Big Data Conference in Boston, he covered several challenges of Big Data, including its increasing complexity.

Apparently, the more data you have, the harder it can be to find value in it. VR modeling could greatly reduce the noise in the final, critical visualization phase of Big Data initiatives. If immersion really does increase the brain’s data bandwidth, analysts could draw conclusions faster and more accurately.

Accessing agile storage and analytic tools for Big Data

Another key point in Silver’s HP Big Data Conference speech has to do with moving and storing Big Data: “Where do you put it all?” Many companies are looking to cloud object storage, which is well suited to storing large volumes of unstructured data and providing access to cloud-based analytics.

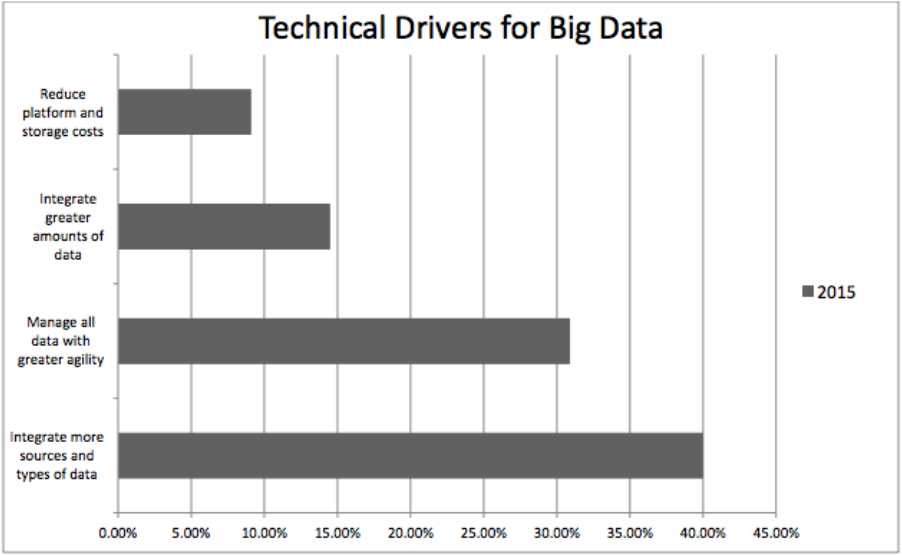

As a scalable and much more flexible storage choice, the cloud will likely be the answer for the more than 30% of firms in NVP’s survey that cited “the availability of new data management approaches (e.g. Hadoop) that provide greater agility and nimbleness” as a top priority.

However, most large enterprises have legacy investments in on-premises data centers. One solution is to decommission and repurpose or sell off data center property to free up capital for cloud investment, a move many large companies are making.

With cloud-based Big Data analytics services on the rise for all kinds of applications, the cloud will likely be the home for most Big Data.

Latency and data access

But as Silver reminded us, “can you deal with latency and how often do you need to access data?”

This all depends on having acceleration technology that can handle long-haul transfers over IP networks, and a cloud architecture that supports data ingestion directly from globally distributed offices.
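To see why latency matters so much for long-haul transfers, here is a rough back-of-the-envelope sketch in Python. It assumes a single TCP stream, whose throughput cannot exceed its window size divided by the round-trip time. The function names, the 64 KiB window and the 150 ms round trip are illustrative assumptions, not figures from Silver's talk or the NVP survey.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-stream TCP throughput in megabits per second.

    A sender can have at most one window of data in flight per round trip,
    so throughput <= window size / round-trip time.
    """
    rtt_seconds = rtt_ms / 1000.0
    return (window_bytes * 8) / rtt_seconds / 1_000_000


def transfer_hours(dataset_gb: float, throughput_mbps: float) -> float:
    """Hours needed to move dataset_gb (decimal gigabytes) at a given rate."""
    total_bits = dataset_gb * 8 * 1000**3
    return total_bits / (throughput_mbps * 1_000_000) / 3600


if __name__ == "__main__":
    # Hypothetical scenario: 1 TB moved between continents (150 ms round trip)
    # over one stream with a 64 KiB window, versus a nearby site (10 ms).
    for rtt_ms in (150, 10):
        rate = max_tcp_throughput_mbps(64 * 1024, rtt_ms)
        hours = transfer_hours(1000, rate)
        print(f"RTT {rtt_ms} ms: at most {rate:.1f} Mbps, about {hours:.0f} hours for 1 TB")
```

Under these assumptions the round trip, not the size of the pipe, sets the ceiling. That is roughly the gap that larger windows, parallel streams or dedicated acceleration tools try to close.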

Gathering Big Data is the first step and includes figuring out where to put it and how to access it. However, being able to glean actionable insight could require the superhuman, data-bandwidth boost of Virtual Reality.

But hey, it’s always nice when work starts feeling more like entertainment.