Send Large Files: A Guide for Media & Entertainment Professionals

What is our current definition of a “large” digital file? The definition has certainly not remained static. What was an unwieldy file five years ago might be considered small today. For the general public and typical consumers, files ranging from 500MB to 2GB might be considered large. However, in fields such as media and entertainment, life science, and other data-intensive businesses, such file sizes are comparatively small.

Media file size depends on content length, resolution, amount of compression, and bit rate. The longer the content, the higher the resolution, the lighter the video compression, and the higher the bit rate, the larger the file. But “large” is also relative to the ability to use, move, transform and play a file.
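As a rough illustration of how bit rate and content length drive file size, here is a minimal sketch. It assumes a constant bit rate and ignores container overhead, and the bit rates in the loop are hypothetical figures chosen for illustration, not values from any particular codec.

```python
def file_size_bytes(bit_rate_mbps: float, duration_seconds: float) -> float:
    """Approximate size of a constant-bit-rate stream, in bytes."""
    bits = bit_rate_mbps * 1_000_000 * duration_seconds
    return bits / 8  # 8 bits per byte


def to_gigabytes(size_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 10^9 bytes)."""
    return size_bytes / 1e9


ONE_HOUR = 3600  # seconds

# Hypothetical bit rates, for illustration only.
for label, mbps in [("lightly compressed", 800), ("mezzanine", 50), ("streaming", 8)]:
    size_gb = to_gigabytes(file_size_bytes(mbps, ONE_HOUR))
    print(f"One hour at {mbps} Mb/s ({label}): about {size_gb:.1f} GB")
```

The same hour of content can differ in size by two orders of magnitude depending on the bit rate alone.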

In the media and entertainment industry, where video acquisition and display technology advance rapidly, the concept of “large” shifts. And while the idea of what a large file is constantly changes (from SD to 4K, DV to RAW), the challenges of preparing, securing and sending large files remain.

Understanding the History of Media Files and Transfers

Hand-carrying analog film containers defined content exchange from the advent of cinema in 1895 until the dawn of the digital video age. With the proliferation of computers in the 1970s and 80s, files (word processing programs, games, documents) were stored and moved on external disks. Networked computers and the birth of the Internet brought electronic messages, and soon after, small data files could be sent over the Internet, albeit slowly.

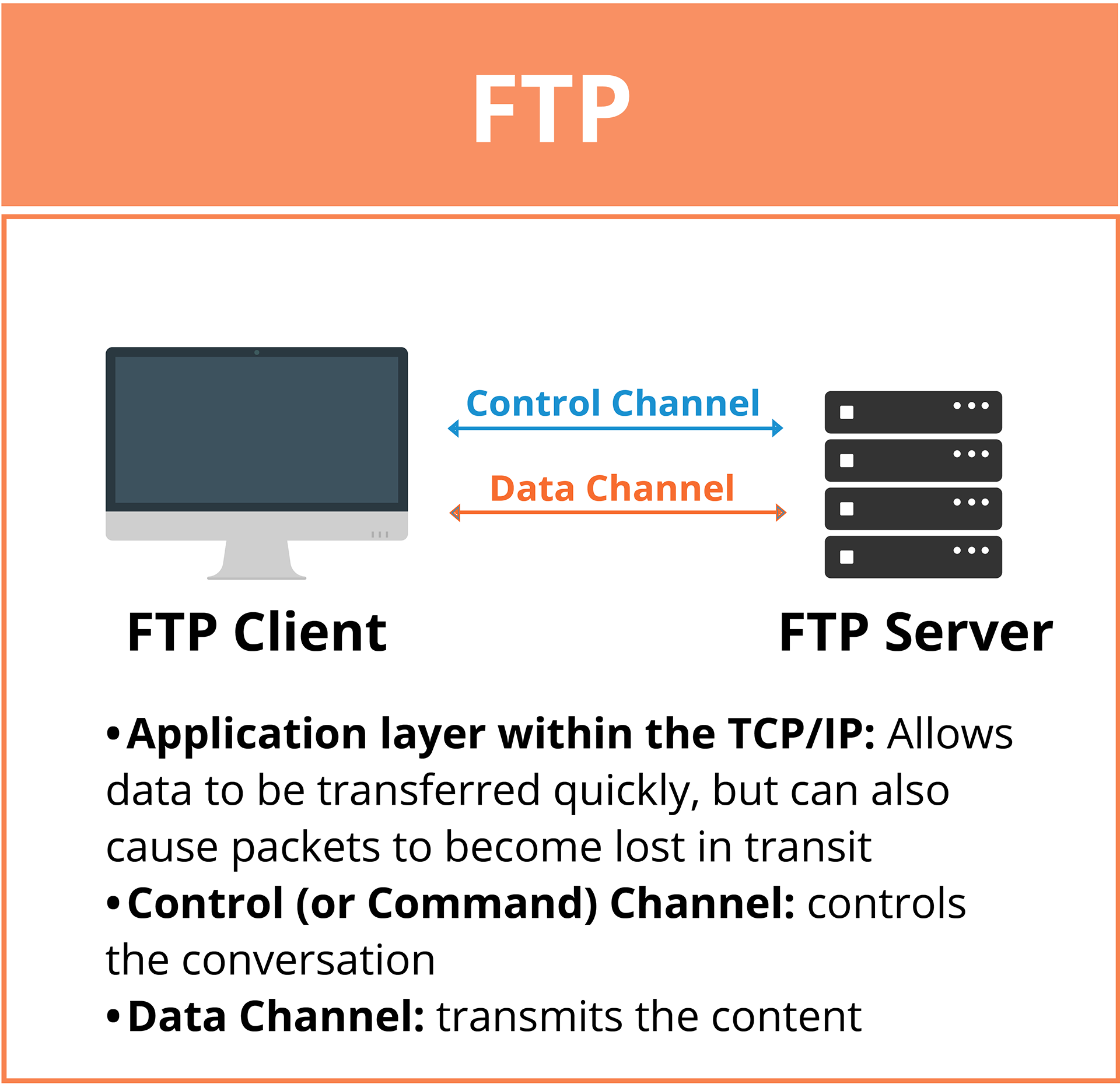

As computers and network infrastructures advanced, so did the ability to send large files electronically and through the Internet. First specified in 1971, File Transfer Protocol (FTP) emerged as the most efficient online file transfer method by the mid-1980s.

The 1980s and early 1990s introduced non-linear video editing, like Avid, and computer software programs, like Adobe Photoshop and After Effects, for electronic graphical content creation for media production. With Apple QuickTime released in 1991, providing a more consumer-oriented file format, files became an integral part of the media and entertainment workflow. While FTP was used for electronic file transfer, the predominant delivery method was by drive or disk.

The 2000s saw a rapid increase in file-based acquisition and delivery with the introduction of Sony’s XDCAM format, and theatrical cinemas began moving from physical film reels to digital cinema packages (DCP). In 2011, the earthquake and tsunami in Japan damaged HDCAM tape factories, and the resulting tape scarcity further accelerated the move to file-based acquisition and delivery.

Companies started utilizing cloud-based file transfer and storage services like Amazon S3, Google Drive, and Dropbox. With the growing demand for higher quality content, especially with 4K resolution, advanced digital file transfer technologies that could send large files quickly, consistently and securely over the Internet emerged from companies like Aspera, Signiant, and others.

The evolution of digital file transfer continues to progress, driven by advancements in technology, changing consumer expectations, innovation, and the need for efficient and secure content distribution.

The Anatomy of a Video File Transfer

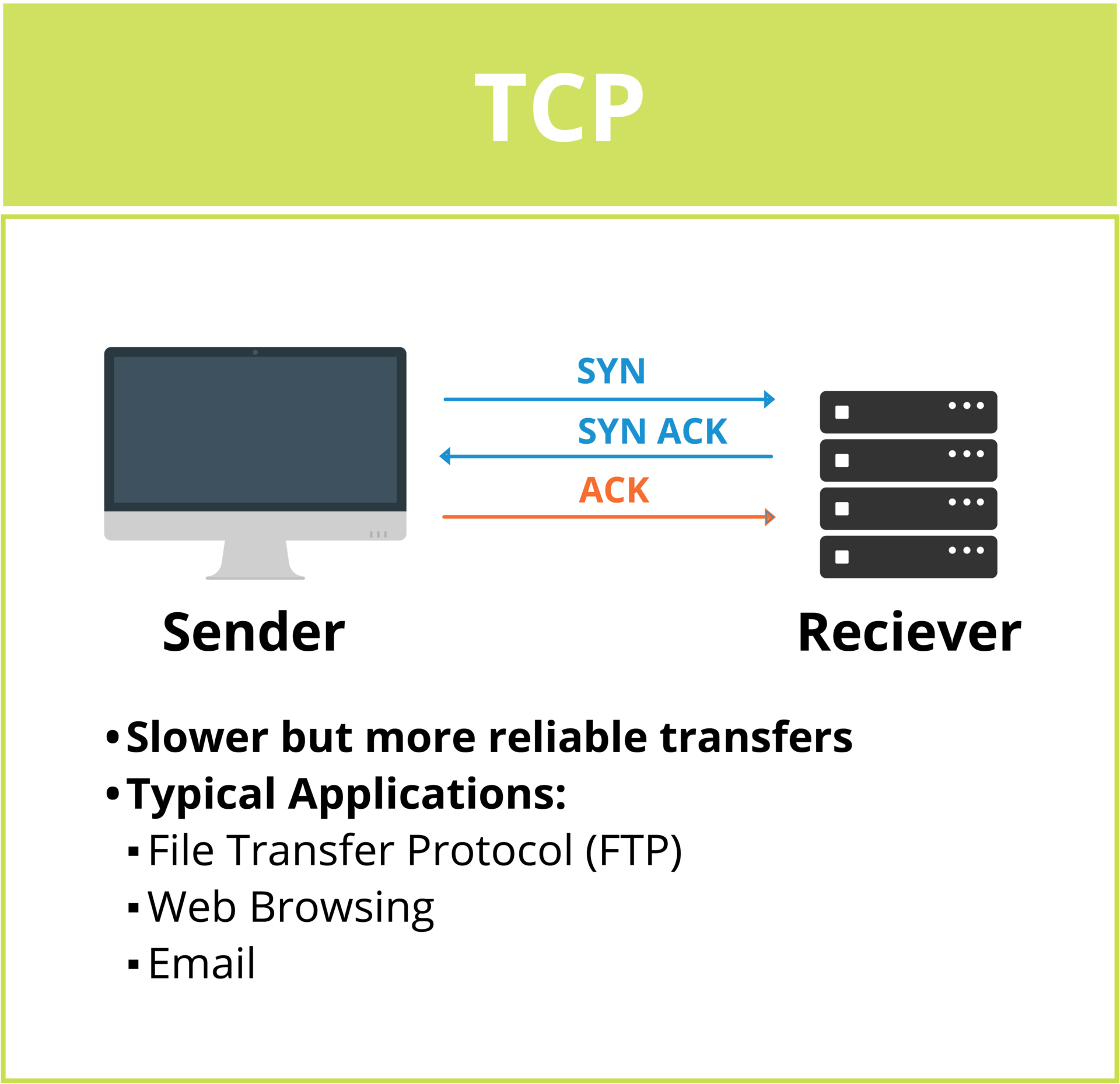

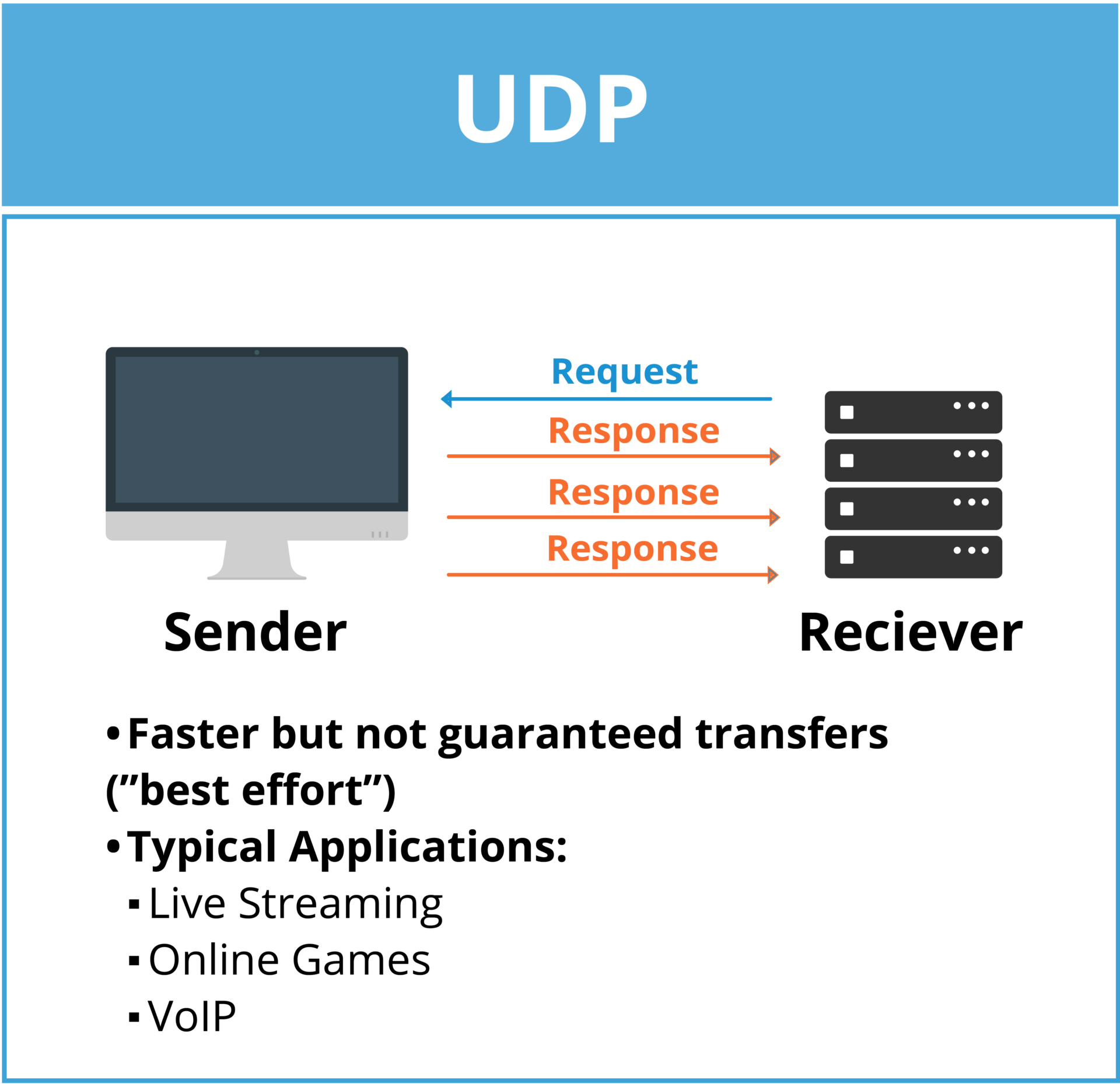

Video file transfer is governed by a communications protocol, a set of rules that define how information is transmitted between computers in a network. Transmission Control Protocol (TCP), User Datagram Protocol (UDP) and File Transfer Protocol (FTP) are examples of common standards used today for sending large files.

TCP Transfers

TCP establishes a connection between a source and its destination before it transmits data, and the connection remains live until communication ends. TCP transfers data in a carefully controlled sequence of packets. As each packet is received at the destination, an acknowledgment is returned to the sender. If the sender does not receive the acknowledgment within a certain period of time, it simply sends the packet again. This can lead to considerable overhead and greater use of bandwidth.
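The acknowledge-and-resend behavior can be sketched with a small simulation. This is a deliberately simplified stop-and-wait model, not real TCP, which uses sliding windows, cumulative acknowledgments and adaptive timeouts. The function name `send_reliably` and the `loss_rate` parameter are hypothetical, introduced only for illustration.

```python
import random


def send_reliably(packets, loss_rate, seed=0, max_attempts=50):
    """Deliver packets in order, resending each one until it is acknowledged.

    Simplified model: one packet in flight at a time, and a lost packet and
    a lost acknowledgment are treated as the same event.
    """
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    received = []
    transmissions = 0
    for seq, payload in enumerate(packets):
        for _attempt in range(max_attempts):
            transmissions += 1
            lost = rng.random() < loss_rate
            if not lost:
                received.append((seq, payload))  # acknowledged, move on
                break
            # No acknowledgment arrived in time: loop around and resend.
        else:
            raise TimeoutError(f"packet {seq} was never acknowledged")
    return received, transmissions


packets = [f"chunk-{i}".encode() for i in range(1000)]
for loss in (0.0, 0.05, 0.30):
    _, sent = send_reliably(packets, loss_rate=loss)
    print(f"loss {loss:.0%}: {sent} transmissions for {len(packets)} packets")
```

Every packet arrives, and in order, but the number of transmissions grows with the loss rate, which is the overhead described above.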

UDP Transfers

UDP is a standardized method for transferring data between two computers in a network. Unlike TCP, UDP sends data packets directly to a target computer without first establishing a connection, indicating the packet order, or checking whether they arrived as intended.
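To make the contrast concrete, the following sketch simulates UDP-style delivery: datagrams may be dropped or arrive out of order, and the sender is never told. It does not open real sockets. Note that the sequence numbers here are added by the (hypothetical) application, since UDP itself carries none, and that `udp_like_send` and `missing_sequence_numbers` are illustrative names, not part of any library.

```python
import random


def udp_like_send(datagrams, loss_rate, seed=0):
    """Simulate fire-and-forget delivery: drops and reordering, no feedback."""
    rng = random.Random(seed)
    delivered = [d for d in datagrams if rng.random() >= loss_rate]
    rng.shuffle(delivered)  # arrival order is not guaranteed
    return delivered


def missing_sequence_numbers(sent_count, delivered):
    """Receiver-side check for gaps, using application-level sequence numbers."""
    seen = {seq for seq, _payload in delivered}
    return [seq for seq in range(sent_count) if seq not in seen]


datagrams = [(seq, b"payload") for seq in range(100)]
arrived = udp_like_send(datagrams, loss_rate=0.1)
gaps = missing_sequence_numbers(len(datagrams), arrived)
print(f"{len(arrived)} of {len(datagrams)} datagrams arrived; {len(gaps)} missing")
```

Any recovery from those gaps has to be built by the application on top of UDP.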

FTP Transfers

FTP is an application-layer protocol that runs on top of TCP/IP and relies on two communication channels between the client and server: a command channel for controlling the conversation and a data channel for transmitting the content. The end user’s computer acts as the local host. A second computer, usually a server, acts as a remote host. Servers must be set up to run FTP services and the client must install FTP software. Once logged on to an FTP server, a client can upload, download, delete, rename, move and copy files. FTP sessions work in active and passive modes.

FTP allows data to be transferred between two computers, but packets can be lost in transit and must then be resent, causing delays. These delays are exacerbated when working with very large files and when transferring over long distances and congested networks.
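For readers who want to see what an FTP upload looks like in practice, here is a minimal sketch using Python's standard `ftplib` module. The host name, credentials and file path passed to it would be your own; the ones in the usage comment are placeholders. Plain FTP sends credentials unencrypted, so treat this as an illustration of the two-channel model rather than a recommended workflow.

```python
from ftplib import FTP
from pathlib import Path


def upload_file(host: str, user: str, password: str,
                local_path: str, passive: bool = True) -> None:
    """Upload one file to an FTP server."""
    path = Path(local_path)
    with FTP(host) as ftp:  # opens the command channel
        ftp.login(user=user, passwd=password)
        ftp.set_pasv(passive)  # choose passive or active mode
        with path.open("rb") as fh:
            # STOR opens a separate data channel for the file contents.
            ftp.storbinary(f"STOR {path.name}", fh)


# Example call (placeholder values, requires a reachable FTP server):
# upload_file("ftp.example.com", "editor", "secret", "dailies/reel_01.mov")
```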

Challenges in Sending Large Files

There are several challenges and considerations when sending large files and datasets. A file passes through a series of connections and technologies on its way from origin to destination, and each of them can significantly affect transfer speed.

Origin

A file transfer immediately starts with several potential congestion or bottleneck points, each impacting the efficiency of sending large files.

The storage where the file resides is the first point. The storage’s read/write speed affects how fast the file can be sent. A file on an old USB thumb drive cannot be read as quickly as one on an internal SSD.

The connections from one device to another also affect speed. The port and cable type on either end each have a limited ability to pass data, and the transfer is throttled to the slower of the two. A USB 2.0 cable cannot pass as much information as a Thunderbolt 3 cable.
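The "slowest link wins" idea can be expressed in a few lines. In this sketch the interface figures are the nominal signaling rates (480 Mb/s for USB 2.0, 40 Gb/s for Thunderbolt 3), while the storage and network figures are hypothetical values chosen for illustration.

```python
def slowest_stage(stage_rates_mbps):
    """Return the (name, rate) of the stage that caps end-to-end speed."""
    name = min(stage_rates_mbps, key=stage_rates_mbps.get)
    return name, stage_rates_mbps[name]


# Rates in megabits per second.
path = {
    "source SSD (hypothetical)": 4_000,
    "USB 2.0 cable (nominal)": 480,
    "office network (hypothetical)": 1_000,
}
name, rate = slowest_stage(path)
print(f"Bottleneck: {name} at {rate} Mb/s")

# Swapping the cable moves the bottleneck elsewhere rather than removing it.
del path["USB 2.0 cable (nominal)"]
path["Thunderbolt 3 cable (nominal)"] = 40_000
name, rate = slowest_stage(path)
print(f"Bottleneck: {name} at {rate} Mb/s")
```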

On-Premises Network Configurations

On-premises network hardware and configurations are the next point, where the file can pass through at maximum speed or be bogged down.

The physical connections, again, are points of potential inefficiencies — ports, cables, and hardware.

Network activity contributes to filling up bandwidth and slowing down data movement. With traditional protocols like TCP (Transmission Control Protocol), data traveling short distances uses a disproportionately larger share of bandwidth than data traveling long distances on the same network.

Bandwidth allocation is a common IT configuration. Most organizations partition their bandwidth for other operations. So, you may have a 100 Gb/s pipe, but transfers could be allotted only a 100 Mb/s portion of that.
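A quick calculation shows how much an allocation like this matters. The sketch below reads the figures in the example as 100 Gb/s and 100 Mb/s, assumes a hypothetical 1 TB file, and ignores protocol overhead, so the times are best-case.

```python
def transfer_seconds(file_bytes: float, rate_bits_per_second: float) -> float:
    """Best-case transfer time: file size in bits divided by the line rate."""
    return file_bytes * 8 / rate_bits_per_second


ONE_TERABYTE = 1e12  # bytes (hypothetical file size)

full_pipe = transfer_seconds(ONE_TERABYTE, 100e9)  # the whole 100 Gb/s pipe
allocated = transfer_seconds(ONE_TERABYTE, 100e6)  # a 100 Mb/s allocation

print(f"Full pipe:  {full_pipe:.0f} seconds")
print(f"Allocation: {allocated / 3600:.1f} hours")
```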

IT administrators could provide faster transfers through their own UDP-based configurations; however, these will be more prone to file corruption and loss of information.

Network Conditions

Public Internet speed and reliability vary greatly depending on location, infrastructure quality, and network traffic. Rural or remote areas often experience slower speeds and less reliable connections. It also relies heavily on physical infrastructure like underground cables, data centers, and network nodes. Damage to this infrastructure from natural disasters, accidents, or sabotage can disrupt connectivity.

Private networks require regular maintenance and upgrades to remain secure and efficient. This ongoing requirement can be resource-intensive. Private networks might have limited bandwidth, storage, and processing power, unlike public networks, which can offer vast resources due to their size and multiple stakeholders.

Internet protocols such as TCP and UDP also play a part. Standard TCP is more reliable but slower, while UDP is faster but doesn’t guarantee reliability: a packet that isn’t received is simply dropped, without notification to the sender.

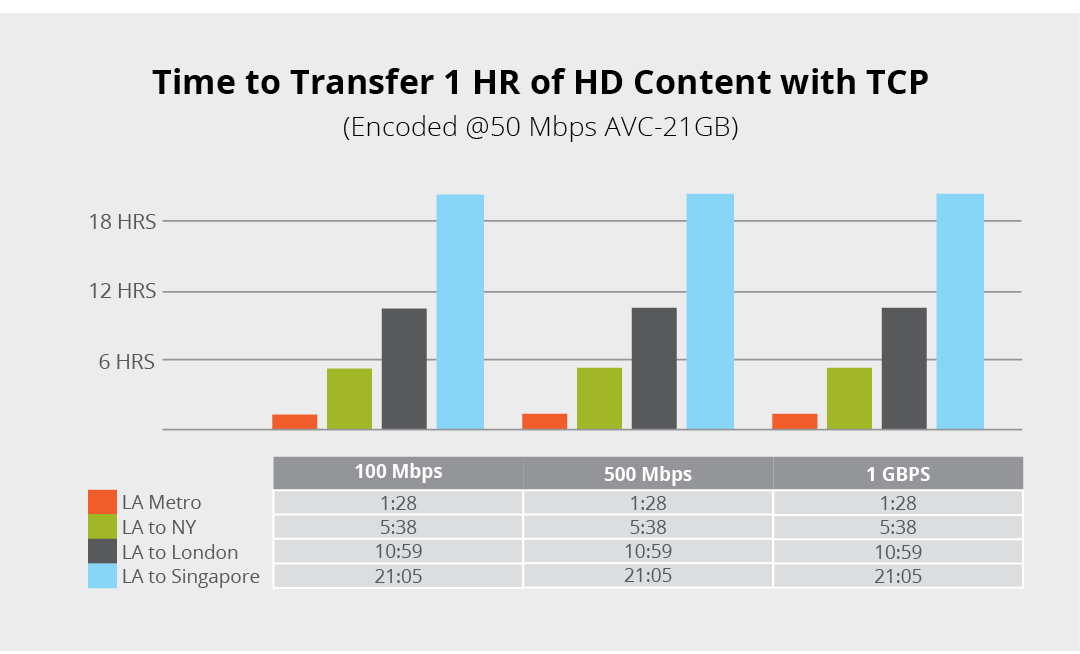

Latency is the time it takes data to travel from one point to another. It can have a huge impact considering the indirect path data takes, routing and switching overhead, and the large number of round trips required by standard protocols to complete simple operations.
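To get a feel for how round trips add up, consider this small sketch. The workload (5,000 files, four round trips each) and the round-trip times are assumptions made up for illustration; real protocols and networks vary.

```python
def round_trip_overhead_seconds(round_trips: int, rtt_seconds: float) -> float:
    """Time spent purely waiting on round trips, before any payload moves."""
    return round_trips * rtt_seconds


# Hypothetical workload: 5,000 files, each needing 4 protocol round trips.
files, trips_per_file = 5_000, 4

for label, rtt in [("same city, assumed 5 ms RTT", 0.005),
                   ("intercontinental, assumed 150 ms RTT", 0.150)]:
    wait = round_trip_overhead_seconds(files * trips_per_file, rtt)
    print(f"{label}: {wait / 60:.1f} minutes of waiting")
```

The data is identical in both cases; only the distance, and therefore the waiting, changes.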

Destination

A file’s destination also impacts the speed of sending large files, since it is subject to the same storage speeds, cables and ports, and internal network traffic as the rest of the journey. A file may have traveled by the fastest, most efficient route possible, but if the destination has network congestion and slow connections, and the file is downloaded to slow storage, the transfer as a whole will still be slow.

All in all, the bigger the files, the longer the distances, or the more congested the network, the more the performance will be impacted — and in some cases, by a factor of 100!

In August 2023, IBISWorld, a global industry research company, identified video post-production services in the U.S. as the top industry affected by Internet traffic volumes. They noted that “a greater number of entertainment websites will require the services of post-production companies to provide viewers with a greater volume of content on short deadlines.”

Bandwidth Bottlenecks for Sending Large Files

The common assumption that increased network bandwidth directly results in faster file transfers is not entirely accurate, as network bandwidth and network throughput are distinct concepts. For instance, a network with a bandwidth of 1Gb/s might theoretically transfer one gigabit per second, but this is contingent on an unobstructed network — akin to an empty highway — and the transfer software’s capability to fully utilize the available bandwidth.

When transferring media files over great distances or through congested networks, the actual throughput often falls short of the theoretical bandwidth. This discrepancy is due to limitations in standard internet transfer methods. Large file transfers over long distances are prone to delays and potential failures, influenced by network latency and the way internet protocols handle such latency. The greater the distance between the sender and the receiver, the more the transfer is susceptible to latency effects.

With standard TCP (Transmission Control Protocol), even a moderate increase in network traffic can significantly slow down the transfer process, while heavy traffic can lead to a complete standstill. This is because standard TCP involves sending small data packets and then waiting for an acknowledgment from the destination system, which accumulates latency rapidly with each round trip. On the other hand, UDP (User Datagram Protocol) transmits packets without guaranteed ordering or flow control, which means packets that are not received are simply dropped. Although UDP can be faster than TCP, its standard implementation is generally less reliable for data transfer.
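The gap between bandwidth and throughput can be estimated with a simple rule: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput cannot exceed the window size divided by the round-trip time. The sketch below applies that bound using a 65,535-byte window, the largest TCP allows without the window scaling option. Modern stacks usually negotiate larger windows, so treat the numbers as an illustration of the effect rather than a prediction, and the round-trip times as assumptions.

```python
def window_limited_throughput_bps(bandwidth_bps: float,
                                  window_bytes: int,
                                  rtt_seconds: float) -> float:
    """Upper bound on throughput: the lesser of line rate and window / RTT."""
    window_rate = window_bytes * 8 / rtt_seconds
    return min(bandwidth_bps, window_rate)


LINK = 1e9       # a 1 Gb/s link
WINDOW = 65_535  # bytes, maximum TCP window without window scaling

for label, rtt in [("0.1 ms (same rack)", 0.0001),
                   ("10 ms (regional)", 0.010),
                   ("100 ms (intercontinental)", 0.100)]:
    bps = window_limited_throughput_bps(LINK, WINDOW, rtt)
    print(f"RTT {label}: at most {bps / 1e6:,.1f} Mb/s")
```

On the same 1 Gb/s link, the achievable rate falls as the round-trip time grows, which is why distance matters as much as bandwidth.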

Hidden Costs for Sending Large Files

The size of a file significantly influences the speed and potential unforeseen expenses associated with its transmission over the Internet. The speed at which large files are transferred is affected by the available bandwidth and the chosen method or service for the transfer.

Different protocols for file transfer exhibit varied efficiencies in handling large files. Larger files are more prone to packet loss, leading to incomplete or corrupted transfers requiring time-consuming retransmissions. Moreover, depending on the chosen service, the transmission of large files can lead to unexpected data charges, particularly if users exceed their plan’s data limits.

Large File Data Integrity & Security Needs

Speed and cost are not the only concerns when sending large files; security and data integrity are just as crucial. This is particularly important in the media and entertainment industry, where compromised files can lead to significant intellectual property and financial losses. File transfer tools must include:

- Authentication to ensure users are who they say they are.

- Authorization to ensure that users and automated processes have access only to approved assets.

- Data Confidentiality to protect data from unwanted disclosure.

- Data Integrity to ensure data cannot be corrupted.

- Non-repudiation to make sure users cannot deny having taken an action.

- Availability to protect from interrupted access.

- Third-party assessment by reputable agencies such as the Trusted Partner Network (TPN) or the Independent Security Evaluators (ISE).
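As one small example of the data integrity item above, a receiver can compare a checksum of the delivered file against the value computed by the sender. This is a minimal sketch using Python's standard `hashlib`; the function names are hypothetical. A checksum on its own detects accidental corruption only; it does not cover authentication or confidentiality, which need the other measures in the list.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(path: str, expected_hex: str) -> bool:
    """Return True if the file at `path` matches the sender's SHA-256 digest."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


# Usage (placeholder path): the sender publishes sha256_of_file("reel_01.mov"),
# and the receiver calls verify_transfer("reel_01.mov", published_digest).
```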

Building secure software requires a strong understanding of both the threats you’re protecting against and secure design principles. Otherwise, security functions can be implemented in ways that don’t actually protect assets from threats.

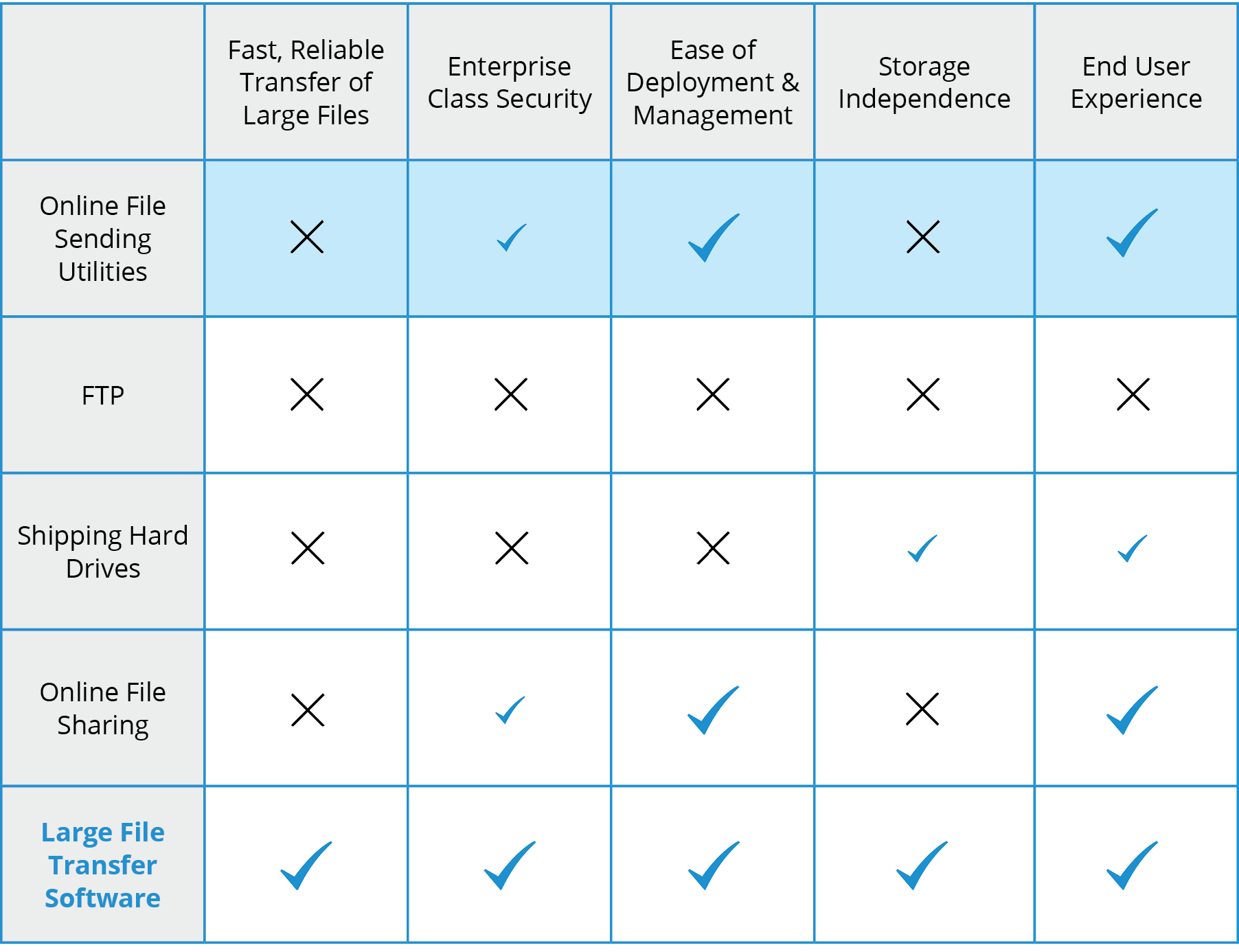

Tools & Techniques for Sending Large Files

Currently, various methods exist for quickly sending large files, with most relying on the public internet. Despite the advent of modern accelerated file transfer technologies, traditional practices like shipping hard drives and using FTP (File Transfer Protocol) remain common in many organizations. Cloud-based services such as Dropbox, WeTransfer, and Box have gained popularity for their ease of use, but they require uploading files to third-party servers, which means users must relinquish control over their data.

Traditional Tools

Alternatively, there are subscription-based cloud services specifically designed to send large files rapidly via the Internet. These services leverage advanced acceleration technologies, allowing users to maintain control over their files throughout the transfer process.

This modern software effectively merges the speed advantages of UDP (User Datagram Protocol) with the reliability of TCP (Transmission Control Protocol) and other performance enhancements to provide a more efficient and secure file transfer solution.

Advanced Transfer Solutions: The Signiant Platform Advantage

The Signiant Platform is a comprehensive solution designed to efficiently, securely, and rapidly send large files and data sets across networks. It caters to the needs of media companies and various businesses by offering two key products: Media Shuttle and Jet.

Media Shuttle: Media Shuttle is the easiest and most reliable way for people to send any size of file, anywhere, fast. With Shuttle, end-users can securely access and share media assets from any storage, on-premises or in the cloud. With over 1,000,000 global end-users, Media Shuttle is the de facto industry standard for person-initiated file transfers.

Jet: Signiant Jet is a powerfully simple solution for automated file transfers between locations, between partners, and to and from the cloud. Backed by Signiant’s patented intelligent transport, Jet is capable of multi-Gbps transfer speeds, supports hot folders and scheduled jobs, and can be easily incorporated into more complex operations with Jet’s API.

The Signiant Platform’s patented file acceleration technology significantly reduces latency and uses available bandwidth efficiently, enabling transfers up to 100 times faster than standard methods like FTP. It has robust security measures, including Transport Layer Security (TLS), and features Checkpoint Restart for automatically resuming interrupted transfers. It is a preferred tool among Hollywood studios, broadcasters, and sports leagues for its security and efficiency.
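The general idea behind resuming an interrupted transfer can be sketched with a local file copy. This is a generic illustration of the concept, not Signiant's implementation, and `resume_copy` is a hypothetical name. It assumes the bytes already at the destination are intact; a production tool would verify them before continuing.

```python
import os


def resume_copy(src: str, dst: str, chunk_size: int = 1024 * 1024) -> int:
    """Copy src to dst, continuing from however many bytes dst already holds.

    Returns the offset the copy resumed from (0 for a fresh transfer).
    """
    offset = os.path.getsize(dst) if os.path.exists(dst) else 0
    with open(src, "rb") as fin, open(dst, "ab") as fout:
        fin.seek(offset)  # skip what was already delivered
        while chunk := fin.read(chunk_size):
            fout.write(chunk)
    return offset
```

If a multi-terabyte transfer fails at 90 percent, restarting from the last checkpoint means resending only the remaining 10 percent rather than the whole file.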

Case Study Spotlight: DigitalFilm Tree

LA-based post-production facility DigitalFilm Tree uses Media Shuttle to deliver 5 to 12 terabytes per day across multiple projects, to and from many locations with varying internet connectivity, within 8 to 12 hours of uploading. Ted Lasso, Shrinking, The Umbrella Academy and Manifest are among the programs DigitalFilm Tree services. Uniquely, DigitalFilm Tree provides originally shot footage, often acquired in a RAW format, to producers, artists and partners wherever they are in the world.

“It’s routine to shoot five terabytes a day,” CEO and founder Ramy Katrib said. “Thanks to Media Shuttle, we distribute that to anywhere in the world as a matter of routine.”

Media Shuttle is trusted to distribute this massive, valuable media anywhere in the world across all post parameters, whether it’s VFX, color, online, final delivery, or final sign-off.

“It is a backbone of our daily operations,” said Nancy Jundi, COO and CFO. “Media Shuttle, for us, is incredibly ubiquitous. It’s in everything, like water.”

Choosing the Right Tool to Send Large Files

There is no shortage of ways to send large files: FTP, online file-sharing software, professional enterprise solutions. Moving files across short or long distances, between companies, to people or across time zones is a growing challenge, and traditional file movement technology is no longer adequate.

Just as most businesses today can’t operate without the Internet, media companies are realizing an essential need for large file transfer solutions — advanced technology that makes it easy to transfer any size file with speed and security over standard IP networks, no matter how far across the globe they need to go. If you’re looking to purchase large file transfer software for your business, it’s important to understand the market drivers that have created the need, the available options for moving media files, and some key requirements to look for.

The benefits of using a fit-for-purpose tool like the Signiant Platform to transfer large video files in mission-critical environments cannot be overstated. Speed, reliability, and security top the list, but many other factors come into play, such as ease of use, easy onboarding, administration tools, and checkpoint restart.

Most other file transfer tools, typically older, were not built with today’s large and growing video file sizes in mind. Many have loose security measures or none at all. Poor speed and reliability defeat the purpose and end up costing more time and resources.